Project Fair 2025

The annual project fair of METU EEE capstone projects was held in the Culture and Convention Center on June 25th, 2025.

Program

- 10:00–10:30 Opening Ceremony (Kemal Kurdaş Hall)

- 10:30–12:00 Exhibition (1st session)

- 12:00–13:00 Lunch Break

- 13:00–15:00 Exhibition (2nd session)

- 15:00 Award Ceremony (Kemal Kurdaş Hall)

Groups & Projects

The projects of this year’s participant groups are given below. You can click on group names provided in the table to reach detailed information about a specific group.

Auralis

Advisor: Assoc. Prof. Fatih Kamışlı

Auralis presents a lightweight, portable head-tracking system for virtual reality that operates without the need for external sensors. Using two cameras as a stereo camera and an IMU, the system estimates real-time 6-DoF head motion through Kalman Filter-based sensor fusion in a 2m x 2m indoor area. A compact headset and waist-mounted processor designed for the comfort of the user send motion data wirelessly to a computer, where a 3D head model mirrors the user’s movements on an external device’s screen.

BAYDES Tech

Advisor: Assoc. Prof. S. Figen Öktem

We’ve built a machine that classifies and redirects chicken products, aiming to provide a fast and cost-effective industrial solution. The system starts when the ESP32, the Raspberry Pi 4B, and a remote computer establish a TCP connection over Wi-Fi. A camera mounted inside a black box, which is designed to minimize environmental interference, continuously captures images of the conveyor belt. These images are transmitted via TCP to the remote computer, where an ML-based classification algorithm processes them. The classification results are buffered. When a chicken product triggers the distance sensor, the system applies majority voting to the buffered data and updates the GUI. The final decision is then sent back to the ESP32 over TCP. Based on this result, the relevant servo motor positions itself and pushes the product into its assigned box.
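The majority-voting step can be illustrated with a minimal sketch. The buffer size, the label names, and the function name `majority_vote` are hypothetical; the team's actual implementation is not described in this abstract.

```python
from collections import Counter, deque

# Hypothetical buffer size; the abstract does not say how many results are kept.
BUFFER_SIZE = 15


def majority_vote(labels):
    """Return the most frequent label, or None if there are no labels."""
    if not labels:
        return None
    # most_common(1) returns [(label, count)]; on a tie, the label that was
    # encountered first wins (Counter preserves insertion order).
    return Counter(labels).most_common(1)[0][0]


# Per-frame classification results accumulate while a product passes the camera.
buffer = deque(maxlen=BUFFER_SIZE)
for label in ["wing", "breast", "wing", "wing", "drumstick"]:  # illustrative labels
    buffer.append(label)

# When the distance sensor fires, one decision is taken and the buffer is reset.
decision = majority_vote(buffer)
buffer.clear()
print(decision)
```

Voting over several frames makes the final decision less sensitive to a single misclassified image.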

Bit.io

Advisor: Prof. Dr. Ali Özgür Yılmaz

The need for antenna tracking systems arises from the requirement to establish communication links with long-range wireless devices, especially in situations where location data is unavailable or not ideal for transmission. As a result, these tracking systems play a crucial role in maintaining reliable communication in specific military and emergency scenarios. This project aims to develop a system capable of effectively tracking a moving target without depending on positioning data. The objective is to create a compact, efficient, and user-friendly solution emphasizing reliability and ease of deployment.

Blue Owl Solutions

Advisor: Prof. Dr. Gözde Bozdağı Akar

The Inside-Out Tracking Sensor for Virtual Reality Applications project is designed to overcome the limitations of VR systems that rely on external sensors. The aim is to provide a portable, cost-effective, and immersive experience by developing an inside-out system that performs precise, real-time 6-degree-of-freedom head tracking. The design uses head-mounted IMU and camera sensors. 6-DoF data is obtained from the camera with the ORB-SLAM algorithm and is then refined using the IMU and a Kalman filter. Communication between the headset and an external computer runs over Wi-Fi using UDP to maintain low latency. On the computer, the data is rendered using Python VTK, so head movement can be tracked in real time.
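The idea of combining a fast IMU with a slower camera-based estimate can be sketched with a one-dimensional Kalman filter on a single angle. This is a deliberately simplified illustration, not the team's filter: the class name, the noise values `q` and `r`, and the sample data are all assumptions.

```python
class ScalarKalman:
    """1-D Kalman filter: the gyro rate drives prediction, the camera angle corrects it."""

    def __init__(self, x0=0.0, p0=1.0, q=0.01, r=0.25):
        self.x = x0  # estimated angle (degrees)
        self.p = p0  # variance of the estimate
        self.q = q   # process noise added per prediction (assumed value)
        self.r = r   # camera measurement noise variance (assumed value)

    def predict(self, rate_dps, dt):
        # Integrate the gyro rate; uncertainty grows with every prediction.
        self.x += rate_dps * dt
        self.p += self.q

    def update(self, measured_angle):
        # Blend in the camera-derived angle, weighted by the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (measured_angle - self.x)
        self.p *= 1.0 - k
        return self.x


kf = ScalarKalman()
# Hypothetical data: five gyro samples at 100 Hz, then one camera measurement.
for _ in range(5):
    kf.predict(rate_dps=20.0, dt=0.01)
print(kf.update(measured_angle=1.2))
```

A full 6-DoF filter tracks a state vector with a covariance matrix, but the predict and update steps have the same structure.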

Clockwork Engineering

Advisor: Assoc. Prof. Sevinç Figen Öktem

The Walkway Non-Compliance Detection System is a real-time computer vision solution developed to enhance pedestrian safety in industrial environments. Utilizing live camera feeds, the system detects individuals who step outside designated walkway zones and responds with immediate visual and audible alerts. Built on YOLOv11 for object detection and ByteTrack for multi-person tracking, the system operates entirely on local hardware without relying on cloud services. A wireless alarm module is triggered when a violation occurs, while safety personnel receive real-time notifications via a cross-platform mobile application. The system also includes a user-friendly calibration interface, allowing users to define walkway zones either by entering HSV color thresholds or drawing custom areas. Each violation is logged with time, location, and tracking ID for later review. The modular architecture supports multi-camera setups and rapid deployment in both indoor and outdoor environments, making it a scalable and practical safety tool for modern workplaces.
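One way to picture the check against a custom-drawn walkway area is a point-in-polygon test on each tracked person. The sketch below uses the standard ray-casting method and assumes that the bottom-center of the detection box approximates where the person stands; the function names and that choice of reference point are illustrative assumptions, not details confirmed by the team.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon [(x0, y0), ...]."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def is_violation(bbox, walkway):
    """bbox = (x_min, y_min, x_max, y_max) in pixels; walkway = polygon vertices."""
    x_min, _, x_max, y_max = bbox
    foot_x = (x_min + x_max) / 2.0
    foot_y = y_max  # image y grows downward, so y_max is the bottom edge
    return not point_in_polygon(foot_x, foot_y, walkway)


# Hypothetical walkway drawn as a rectangle in image coordinates.
walkway = [(100, 0), (300, 0), (300, 400), (100, 400)]
print(is_violation((180, 100, 220, 300), walkway))
print(is_violation((320, 100, 360, 300), walkway))
```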

DALGONA

Advisor: Assist. Prof. Hasan Uluşan

Team DALGONA presents the RF-based Antenna Tracking System (RFATS), a real-time platform that keeps a wireless connection with moving RF signal sources in environments where GPS is unavailable or unreliable. Instead of using location data, RFATS estimates the direction of incoming signals by comparing the phase difference between directional antennas. This information is then used to rotate the antenna system automatically, allowing it to follow the signal with high accuracy. The system achieves tracking speeds above 15°/s and maintains an angular error below 5°. Signal processing is performed on a Raspberry Pi 5 using C++, while motor control is handled by an Arduino Uno that drives a stepper motor for horizontal (azimuth) movement and a DC motor for vertical (elevation) adjustment. A user-friendly C# interface allows for easy monitoring and manual control. The system also includes a signal simulation setup for safe indoor testing. RFATS is compact, modular, and power-efficient, making it suitable for applications such as UAV communication, emergency response, and defense operations.
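The link between phase difference and direction can be illustrated with the textbook two-element interferometer relation, Δφ = 2π·d·sin(θ)/λ. The sketch below assumes two antennas separated by `spacing_m`, a narrowband far-field source, and a hypothetical 2.4 GHz carrier; none of these values come from the abstract, and RFATS itself may estimate direction differently.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def angle_of_arrival(phase_diff_rad, spacing_m, freq_hz):
    """Estimate the arrival angle (degrees from broadside) from a phase difference."""
    wavelength = C / freq_hz
    s = phase_diff_rad * wavelength / (2.0 * math.pi * spacing_m)
    # Measurement noise can push the ratio slightly outside [-1, 1]; clamp it.
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


freq = 2.4e9                   # hypothetical carrier frequency
spacing = (C / freq) / 2.0     # half-wavelength spacing avoids angle ambiguity
print(angle_of_arrival(math.pi / 2.0, spacing, freq))
```

With a spacing above half a wavelength, several angles map to the same phase difference, which is why half-wavelength spacing is used in this example.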

detEEct

Advisor: Assoc. Prof. Sevinç Figen Öktem

We developed an automated PCB defect detection system that identifies both bare PCB (Class A) and component-mounted PCB (Class B) defects with high accuracy and efficiency. A YOLOv8 model was trained and deployed specifically for Class A defects, achieving high recall and precision rates across various defect types and board sizes. For Class B defects, we implemented classical image processing techniques. The system operates via an integrated conveyor and imaging setup controlled by Arduino Uno and Raspberry Pi, enabling real-time image capture and analysis. It achieves processing rates of 8 bare PCBs and 2 assembled PCBs per minute. This cost-effective and scalable solution aims to reduce production losses, improve inspection reliability, and enhance manufacturing throughput. The project deliverables include the trained detection models, system hardware, user manual, and a GitHub repository.

DÜLDÜL

Advisor: Assoc. Prof. Fatih Kamışlı

This project aims to enhance workplace safety by ensuring individuals adhere to designated walkways in industrial environments. Unsafe movement outside marked paths can result in significant health and safety hazards. To address this, an automated monitoring system is developed using image processing techniques to detect violations in real time. Upon detection, the system issues a loud sound or visual alert to warn the individual and logs the incident—including time, location, and recorded visuals—for authorized personnel review. A mobile application is also integrated to provide real-time alerts and access to incident logs. The system supports quick setup and calibration to adapt to varying environments, such as different walkway markings, widths, and lighting conditions. It is scalable to cover large areas with multiple camera support and can operate using edge or cloud computing. The system’s effectiveness will be demonstrated in two distinct environments: an indoor corridor network and a large outdoor area, with performance measured using AUC scores of detection accuracy.

ElecTerra Tech

Advisor: Prof. Gözde Bozdağı Akar

ElecTerra Tech presents an inside-out 6-DoF tracking solution for Virtual and Augmented Reality applications, eliminating the need for external infrastructure. The system consists of a wearable headset equipped with stereo cameras and an Inertial Measurement Unit (IMU), paired with a simulation environment powered by Unreal Engine. Sensor fusion is executed on a Raspberry Pi 5 (8GB), combining the high-speed responsiveness of the IMU with the spatial precision of the stereo cameras to estimate the user’s head position and orientation in real time. This hybrid fusion reduces drift and improves tracking accuracy in both rotational and translational motion, with error rates of approximately 1% and 10%, respectively. Designed for comfort and modularity, the headset weighs less than 250g. The system visualizes user motion wirelessly in a simulated 3D space, offering a cost-effective and portable alternative to traditional VR tracking setups. ElecTerra’s approach prioritizes usability, sustainability, and scalability across multiple immersive technology domains.

Electro Galaxy

Advisor: Prof. Behçet Murat Eyüboğlu

Negligence of walkway boundaries in busy industrial environments is a common cause of safety incidents. This system replaces manual monitoring with automated violation detection and real-time alerts. It is cost-effective, easy to install, and provides instant incident logs and notifications through a mobile app.

IP cameras stream to edge devices in a master-slave setup. YOLO detects people, and DeepSORT tracks them across frames. Violations trigger logging, an audible alarm, and a mobile notification. The system adapts to various walkway layouts and syncs data across devices.

GangCS

Advisor: Prof. Gözde Bozdağı Akar

This project presents the development of a Contactless Vital Sign Monitoring Device capable of detecting human presence and analyzing breathing patterns—classified as regular, irregular, or apneic—within a 2 × 2 meter area in under 300 seconds. The system utilizes an ADALM-PLUTO Software Defined Radio (SDR) in conjunction with Vivaldi and patch antennas for signal transmission and reception. Signal processing is performed using MATLAB to extract and interpret the respiratory data. To support testing and validation, a custom-built breathing simulator was developed, replicating chest wall motion using a rack-and-pinion mechanism controlled by an Arduino UNO. The simulator interface is implemented in C#. This comprehensive approach enables accurate and contactless monitoring of vital signs, with potential applications in healthcare and remote patient monitoring.

INSIGHT

Advisor: Assoc. Prof. Sevinç Figen Öktem

We designed a wearable inside-out tracking system that estimates the user’s 6-degree-of-freedom (6DOF) head pose in real time. The system uses two Raspberry Pi Camera Module 3 units for stereo vision and an Adafruit BNO055 IMU for motion sensing. A Raspberry Pi 5 performs onboard processing, running stereo visual odometry with ORB feature matching and PnP + RANSAC for translation estimation. IMU orientation and acceleration data are fused with visual odometry using a Kalman filter to improve stability and reduce noise. The system sends pose data wirelessly via WebSocket and supports real-time visualization in both RViz (3D) and a web dashboard (2D). It updates at 100 Hz with an average latency of 210±35 ms. Tests show that rotational error stays below 5% and translation error below 10% in isolated axis motion. The full setup costs approximately $296, weighs 930 grams, and operates for about one hour on a 5000 mAh battery.

mbyte

Advisor: Assoc. Prof. Elif Vural

We designed a wearable inside-out tracking system that estimates the user’s 6-degree-of-freedom (6DOF) head pose in real time. The system uses two Raspberry Pi Camera Module 3 units for stereo vision and an Adafruit BNO055 IMU for motion sensing. A Raspberry Pi 5 performs onboard processing, running stereo visual odometry with ORB feature matching and PnP + RANSAC for translation estimation. IMU orientation and acceleration data are fused with visual odometry using a Kalman filter to improve stability and reduce noise. The system sends pose data wirelessly via WebSocket and supports real-time visualization in both RViz (3D) and a web dashboard (2D). It updates at 100 Hz with an average latency of 210±35 ms. Tests show that rotational error stays below 5% and translation error below 10% in isolated axis motion. The full setup costs approximately $296, weighs 930 grams, and operates for about one hour on a 5000 mAh battery.

METU TECH

Advisor: Assoc. Prof. Elif Vural

This project presents the design and implementation of a real-time packaged chicken classification and redirection system for automated production lines. The primary goal is to classify different types of packaged chicken products—such as wings, drumsticks, whole legs, butterflied drumsticks, and breasts—with high accuracy and redirect them to the appropriate path accordingly. The system operates entirely on an embedded platform using a Raspberry Pi 4, ensuring low power consumption and independence from external computing resources. A camera module captures a continuous video feed of products on a conveyor, and the images are processed using a lightweight convolutional neural network based on the MobileNetV2 architecture. Based on the classification output, the Raspberry Pi controls a servo-driven redirection mechanism via PWM signals. The system has been designed to achieve at least 90% classification accuracy. This solution aims to improve the efficiency and reliability of poultry processing lines through intelligent automation.
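Driving a servo via PWM comes down to mapping a target angle to a pulse width. The sketch below assumes a typical hobby servo with a 50 Hz control signal and a 1.0 ms to 2.0 ms pulse range over 0° to 180°; these figures and the function name are assumptions, since the abstract does not specify the servo, and real servos often need calibrated limits.

```python
def servo_duty_cycle(angle_deg, min_pulse_ms=1.0, max_pulse_ms=2.0, freq_hz=50.0):
    """Return the PWM duty cycle (percent) that commands the given servo angle."""
    if not 0.0 <= angle_deg <= 180.0:
        raise ValueError("angle must be between 0 and 180 degrees")
    # Linear interpolation between the pulse widths for 0 and 180 degrees.
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle_deg / 180.0
    period_ms = 1000.0 / freq_hz
    return 100.0 * pulse_ms / period_ms


# Hypothetical mapping from product class to diverter angle.
CLASS_TO_ANGLE = {"wing": 0.0, "drumstick": 90.0, "breast": 180.0}

for name, angle in CLASS_TO_ANGLE.items():
    print(name, servo_duty_cycle(angle))
```

On a Raspberry Pi, the resulting percentage would be passed to whichever PWM interface the GPIO library in use provides.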

METU VISION

Advisor: Prof. Behçet Murat Eyüboğlu

The Inside-Out Tracking Sensor Suite is a portable, low-cost, and marker-free 6-DoF head-tracking system designed for VR applications. Integrating two Pi Camera Module 3 units, a BNO055 IMU, and a TF-Luna distance sensor, it performs real-time pose estimation onboard a Raspberry Pi 5 using an Extended Kalman Filter. The fused pose data is wirelessly transmitted to a PC for 3D rendering. Housed in a lightweight helmet, the system achieves low latency (<20 ms), precise orientation (<1° error), and reliable positional tracking in a compact area without external infrastructure. Its modular and battery-powered design ensures flexibility and usability in mobile scenarios.

MYSTAB

Advisor: Assist. Prof. Hasan Uluşan

This project presents the design, simulation, and implementation of a Software-Defined Radio based Automatic Identification System communication framework tailored for low Earth orbit satellite reception scenarios. The system comprises multiple integrated subsystems, including a Python-based transceiver that acquires live vessel data from BarentsWatch, encodes it into ITU-R M.1371-compliant AIS messages, and transmits them through a MATLAB-driven SDR module utilizing the ADALM-PLUTO platform. The transmitted signals are subjected to a channel model incorporating Free Space Path Loss and Doppler shift to emulate realistic satellite propagation conditions. A parallel MATLAB module provides a fully simulated environment for development and testing. On the reception side, the system features a Python decoder and a real-time graphical interface that visualizes vessel positions on a dynamic map. The system was validated through extensive simulations and fatigue tests, demonstrating robust performance under varying channel impairments. Educationally, the platform offers a practical tool for exploring digital communication principles, SDR development, and maritime safety protocols. Safety precautions, minimal environmental impact, and compliance with RF guidelines were carefully considered throughout the design.

obserVR

Advisor: Assoc. Prof. Elif Vural

The obserVR project develops an affordable, portable, and real-time inside-out VR tracking system using stereo cameras and an IMU. Unlike conventional outside-in systems, obserVR eliminates external sensors, offering 6-DOF head pose tracking through onboard visual-inertial integration. A Raspberry Pi 5 processes sensor data and transmits it wirelessly for 3D visualisation via Unity. The system operates in a 2m×2m area, emphasizing modularity, low latency, and comfort with a head-mounted unit under 300g. The tracking solution achieves improved orientation and position estimation by combining camera depth data and IMU readings. A Python-based GUI integrates live metrics and video. With a six-month development schedule and a $300 budget, the project aims to enhance accessibility and usability in immersive VR applications across various fields.

ODOSAN

Advisor: Assist. Prof. Hasan Uluşan

In today’s hyper-connected world, critical communication links must stay locked even when GPS signals vanish or interference clouds the airwaves. ODOSAN’s RF-Based Antenna Tracking System (RFATS) meets that need with a self-steering receiver panel that listens for its target’s radio signature, calculates direction on the fly, and pivots smoothly to keep antennas perfectly aligned. By blending advanced signal processing, agile mechanics, and an intuitive control stack, RFATS secures long-range links without relying on external location data—and shrugs off jamming, spoofing, and multipath reflections along the way. Its compact, modular design slots easily into mobile platforms, field stations, and autonomous vehicles, laying the groundwork for resilient connectivity, precise asset tracking, and a new generation of RF-driven sensing solutions.

OSEAM

Advisor: Assoc. Prof. Elif Vural

The Inside-Out Tracking Sensor Suite project aims to develop a self-contained, wearable system that delivers precise six-degree-of-freedom (6-DoF) head pose tracking for virtual reality (VR) applications. Unlike traditional outside-in tracking systems, this solution integrates an inertial measurement unit (IMU), a monocular camera, and a 2D LiDAR sensor into a compact headset to achieve accurate, real-time motion tracking without external infrastructure. The system features sensor fusion algorithms that combine IMU-based orientation data with visual odometry and LiDAR-based spatial displacement, providing positional accuracy within ±6 cm and rotational accuracy under ±4°. A LiDAR-based collision warning mechanism enhances user safety by detecting nearby obstacles, while the ability to operate reliably in low-light or completely dark environments ensures broad applicability. Wireless communication transmits pose data to a Unity-based visualization interface with low latency, enabling immersive and responsive VR experiences. This project demonstrates a robust and affordable tracking solution, offering enhanced mobility, scalability, and usability for next-generation consumer and enterprise VR systems.
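A collision warning based on a 2D LiDAR scan can be sketched as a threshold on the nearest valid range reading. The scan format, the 0.5 m threshold, and the treatment of near-zero readings as invalid are assumptions made for this illustration; the abstract does not describe the team's actual rule.

```python
def nearest_obstacle(scan, min_valid_m=0.05):
    """scan: iterable of (angle_deg, range_m). Returns (angle_deg, range_m) or None."""
    # Readings below min_valid_m are treated as "no return" and ignored (assumption).
    valid = [(rng, ang) for ang, rng in scan if rng >= min_valid_m]
    if not valid:
        return None
    rng, ang = min(valid)  # tuples sort by range first
    return ang, rng


def collision_warning(scan, threshold_m=0.5):
    """True if any valid reading is closer than the warning threshold."""
    hit = nearest_obstacle(scan)
    return hit is not None and hit[1] < threshold_m


# Hypothetical scan: four beams, one of them with no return (0.0 m).
scan = [(0, 1.8), (90, 0.35), (180, 0.0), (270, 2.4)]
print(nearest_obstacle(scan), collision_warning(scan))
```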

Ö’ler

Advisor: Prof. Gözde Bozdağı Akar

This project presents the design and implementation of a Software-Defined Satellite AIS Receiver system developed by the team Ö’ler. AIS (Automatic Identification System) is used for tracking ships by transmitting their position, identity, and other essential information. While shore-based AIS systems provide limited coverage, satellite-based reception enables wide-area maritime monitoring.

Our system consists of four main subsystems: Transmitter, Channel Modeling, Receiver, and Visualization GUI. AIS messages are generated, encoded, and modulated in the Transmitter. These signals are passed through the Channel Modeling subsystem, where Doppler shift, free-space path loss (FSPL), and additive white Gaussian noise (AWGN) are applied to simulate real-world satellite conditions. The Receiver captures, corrects, demodulates, and decodes the affected signals. Finally, decoded AIS data is displayed on a real-time, interactive map via the Visualization subsystem using Streamlit and Folium.
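Two of the channel effects named above, free-space path loss and Doppler shift, follow standard formulas that are easy to evaluate. The sketch below uses the AIS 1 channel frequency (161.975 MHz); the 600 km slant range and 7 km/s radial velocity are hypothetical values chosen for illustration, not figures from the project.

```python
import math

C = 299_792_458.0      # speed of light in m/s
AIS1_HZ = 161.975e6    # AIS channel 1 carrier frequency


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB: 20*log10(4*pi*d*f/c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def doppler_shift_hz(radial_velocity_mps, freq_hz):
    """Doppler shift for a closing speed v << c (positive when approaching)."""
    return freq_hz * radial_velocity_mps / C


# Hypothetical low Earth orbit geometry.
slant_range_m = 600e3
radial_velocity_mps = 7000.0

print(round(fspl_db(slant_range_m, AIS1_HZ), 1), "dB")
print(round(doppler_shift_hz(radial_velocity_mps, AIS1_HZ)), "Hz")
```

A Doppler shift of a few kilohertz is significant relative to the narrow AIS channel, which is why the receiver has to correct for it before demodulation.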

The modular, software-based structure of our system ensures flexibility, low cost, and future expandability for potential real hardware integration or advanced applications.

PARS

Advisor: Assoc. Prof. Sevinç Figen Öktem

Introducing PARS’s RF-Based Antenna Tracking System (RFATS), a next-generation solution for robust, long-range wireless communication without relying on GPS or satellite-based location data. Designed for dynamic environments, RFATS intelligently detects and follows pre-defined RF signals using a lightweight, motorized antenna array that automatically aligns itself with a moving transmitter in real time. By combining directional antennas with advanced signal processing, RFATS ensures uninterrupted connectivity even under hostile conditions like signal jamming or multipath interference. From defence applications to industrial automation and beyond, PARS’s RFATS redefines what’s possible in autonomous RF-guided communication: no GPS, no guesswork, just intelligent tracking.

PEC Devices

Advisor: Prof. Dr. Ali Özgür Yılmaz

Developed by PEC Devices, this system automates the classification and sorting of packaged chicken products using real-time computer vision and mechanical redirection. With a Raspberry Pi 5, YOLOv8 algorithm, and an integrated camera-light setup, it classifies five different chicken types—breast, drumstick, whole leg, wing, and butterflied drumstick—with over 85% accuracy. The system sorts items via servo-controlled arms on a gravity-assisted slide, achieving a throughput of up to 60 packages per minute. It is designed to reduce manual labor, improve consistency, and enhance quality control in poultry production lines. Its modular structure supports scalability, real-time monitoring, and future upgrades. The project demonstrates a low-cost, sustainable solution while combining embedded systems, AI, and mechanics. Built entirely by undergraduate students, this prototype reflects practical engineering skills and teamwork under real-world constraints.

PoultrAI

Advisor: Prof. Behçet Murat Eyüboğlu

This report presents PoultrAI, an integrated AI-powered system developed for real-time classification and sorting of five types of packaged chicken products. Aiming to address inefficiencies in manual poultry processing, the system combines three subsystems: a YOLOv8-based AI model, a low-latency electronics subsystem using WebRTC and WebSocket protocols, and a mechanical sorting arm designed for precise, high-speed operation. Tested under varying lighting and orientation conditions, the system demonstrated robust performance, with key challenges mitigated through image augmentation and structural refinements. The hardware configuration, centered on a Raspberry Pi 5 and an Arduino Nano, enables efficient real-time communication and control. The mechanical subsystem, featuring telescopic, servo-driven arms, ensures accurate redirection of products at conveyor speeds up to 0.5 m/s. This automation approach reduces labor dependency, increases consistency, and aligns with broader trends in industrial AI adoption, offering a scalable solution for modern food production lines.

Revolver

Advisor: Assist. Prof. Hasan Uluşan

We built a portable continuous-wave radar system that senses motion in the direction it is aimed. The radar can detect chest movement due to respiration and has been tested successfully at distances of up to 5 meters through obstacles such as wooden doors and thin concrete walls, for respiration frequencies between 0.10 and 0.75 Hz. The system includes a classification algorithm that can distinguish 4 different patterns and a touchscreen GUI for ease of use. Two 2×2 patch antennas were designed for the project to provide directivity. The RF signal is generated by the ADALM-PLUTO SDR module. Signal processing is done on a Raspberry Pi 4, and the overall system is powered by 3×18650 batteries of 2600 mAh capacity.
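Estimating a respiration rate from a chest-displacement signal can be sketched as a search for the strongest spectral component inside the 0.10 to 0.75 Hz band. The example below is a plain-Python illustration on synthetic data; the sampling rate, the 0.01 Hz search step, and the function name are assumptions, and the team's actual processing chain is not described in the abstract.

```python
import cmath
import math


def dominant_frequency(samples, fs_hz, f_min=0.10, f_max=0.75, step=0.01):
    """Return the frequency in [f_min, f_max] with the largest spectral power."""
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]  # remove the DC offset
    best_f, best_power = None, -1.0
    n_steps = int(round((f_max - f_min) / step))
    for k in range(n_steps + 1):
        f = f_min + k * step
        # Correlate the signal with a complex exponential at frequency f.
        acc = sum(x * cmath.exp(-2j * math.pi * f * n / fs_hz)
                  for n, x in enumerate(centered))
        power = abs(acc) ** 2
        if power > best_power:
            best_f, best_power = f, power
    return best_f


# Synthetic chest motion: 0.25 Hz breathing sampled at 10 Hz for 60 s.
fs = 10.0
signal = [math.sin(2.0 * math.pi * 0.25 * n / fs) for n in range(600)]
f_resp = dominant_frequency(signal, fs)
print(f_resp, "Hz, about", round(f_resp * 60.0), "breaths per minute")
```

The frequency resolution is limited by the observation time, so a longer recording separates nearby breathing rates more reliably.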

We also developed a mechanical chest simulator for testing, which can accurately simulate the chest motion during respiration. The simulator is powered with a power supply, and an Arduino UNO is used to drive the NEMA stepper motor.

Short Circuit Solutions

Advisor: Assist. Prof. Hasan Uluşan

The Walkway Non-Compliance Detection Project, developed by Short Circuit Solutions, is a workplace safety system which aims to monitor and enforce compliance with designated walkways in industrial environments. Utilizing computer vision and deep learning, the system detects individuals walking outside of marked walkways in real time. Compliant and non-compliant individuals are visually labeled, and non-compliance events trigger immediate audio alerts to warn the individual. Each incident is logged into a cloud database with time, image, and location data, enabling later review and analysis via a connected mobile application. The system is composed of three main subsystems: edge computing for real-time detection, a cloud infrastructure for data storage and synchronization, and a mobile application for incident monitoring. This integrated solution enhances occupational safety by promoting awareness and accountability in both indoor and outdoor workspaces.

SixPack

Advisor: Prof. Dr. Ali Özgür Yılmaz

The SixPack Inside-Out Tracking Sensor Suite is a wearable system that enables real-time 6-degree-of-freedom head tracking for virtual reality without relying on external infrastructure. It combines inertial and visual data to estimate head orientation and position, using a sensor fusion algorithm for improved accuracy and robustness. The system delivers real-time visualization and achieves high orientation accuracy, maintaining an RMS error below 10°. While translation errors in challenging scenarios exceeded the 30 cm target, the results remain suitable for typical VR applications. The lightweight, ergonomic design enhances user comfort, and the total system cost remains under $300, ensuring affordability. SixPack provides a portable, reliable, and cost-effective alternative to traditional VR tracking setups. Its modular structure supports future improvements and broader use in applications such as immersive learning, simulation, and training.

Spark

Advisor: Assist. Prof. Hasan Uluşan

This project includes a compact, wearable Inside-Out Tracking Sensor Suite designed for real-time 6-DoF head position estimation in virtual reality (VR) applications. At Spark, we integrate two cameras and an inertial measurement unit (IMU) on the headset with a Raspberry Pi 5 processing unit and a rechargeable, portable battery unit placed in a waist pack. The system uses ORB-SLAM3 and fuses the camera data with the IMU data using an Extended Kalman Filter. The combined data enables accurate real-time tracking without the need for an external outside-in sensor. The head position data is transmitted wirelessly over TCP to Unity running on the external computer. This real-time tracking of the head position provides an immersive and responsive user experience. The product is optimized for low power consumption (3 to 4 hours of operation), cost-effectiveness ($291), portability, and reduced latency.

TempusTech

Advisor: Assist. Prof. Hasan Uluşan

Virtual-reality systems that rely on external cameras can be expensive, cumbersome, and immobile; this project counters those drawbacks by developing a fully self-contained, inside-out tracking sensor suite for head-mounted displays. A lightweight camera and six-axis IMU embedded in the headset capture visual and inertial cues that are fused in real time on a battery-powered single-board computer worn at the waist, yielding accurate 6 DoF head pose estimates throughout a 2 m × 2 m indoor play area. These pose updates are transmitted at low latency over a wired link to an external workstation, where a synthetic head model mirrors the user’s movements. Beyond realizing a compact, room-ready prototype, the project also benchmarks the benefits of sensor fusion and evaluates the design’s cost, weight, and user-satisfaction trade-offs—laying the groundwork for more accessible and portable VR experiences.

vEEsion

Advisor: Prof. Gözde Bozdağı Akar

This project presents a compact and low-cost inside-out tracking system for virtual reality (VR), eliminating the need for external tracking hardware. It estimates six degrees of freedom (6-DoF) head pose in real time using a Raspberry Pi 5, a monocular camera, and an IMU. A custom 3D-printed head-mounted assembly ensures precise sensor alignment, user comfort, and ease of use during extended sessions. Additionally, a 3D-printed custom Li-ion battery pack with a 5000 mAh capacity provides reliable performance for at least three hours, enabling fully portable and standalone operation. The estimated pose is transmitted wirelessly to an external computer, where a virtual environment is rendered in real time, allowing immersive interaction based on head movement. The system leverages the OpenVINS visual-inertial odometry framework within a ROS2 environment to achieve efficient sensor fusion, real-time performance, and modular communication between components. The system has been fully integrated, calibrated, and tested. The final product is a scalable, extensible, and affordable tracking solution for VR applications. The complete setup costs approximately $280, and the headset weighs 290 grams (excluding the battery pack).

Voltminds

Advisor: Prof. Behçet Murat Eyüboğlu

We developed a head-mounted 6-DoF tracking system that provides accurate real-time pose estimation for VR applications using inside-out tracking. The system integrates a Bosch BNO055 IMU, a USB camera, and dual ultrasonic sensors, all mounted on a helmet. Data from these sensors is processed on a Raspberry Pi 5, where sensor fusion is performed using a Kalman filter. This fused pose information (x, y, z, yaw, pitch, roll) is wirelessly transmitted to an external computer via TCP over Wi-Fi. The external device visualizes the user’s head movement using a 3D rendered model in real time. Our goal was to eliminate external tracking hardware and deliver a cost-effective, portable solution that functions reliably within a 2×2 meter space. Extensive testing demonstrated ±5 cm positional accuracy and ±1° rotational precision under typical indoor conditions. The system is ideal for educational, rehabilitation, and low-cost VR environments.
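Sending the six pose values over TCP requires some agreed byte layout. The sketch below packs them as six little-endian 32-bit floats, which gives a fixed 24-byte message that is easy to frame on a byte stream. This wire format and the function names are hypothetical; the abstract does not say how the team encodes its data.

```python
import struct

# Hypothetical wire format: six little-endian float32 values
# in the order x, y, z, yaw, pitch, roll.
POSE_FORMAT = "<6f"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # 24 bytes


def pack_pose(x, y, z, yaw, pitch, roll):
    """Serialize one pose sample into a fixed-size binary message."""
    return struct.pack(POSE_FORMAT, x, y, z, yaw, pitch, roll)


def unpack_pose(payload):
    """Inverse of pack_pose; returns a tuple (x, y, z, yaw, pitch, roll)."""
    return struct.unpack(POSE_FORMAT, payload)


message = pack_pose(0.5, 1.25, -2.0, 90.0, 0.0, -45.0)
print(len(message), unpack_pose(message))
```

Because TCP delivers a stream rather than discrete packets, the receiver would read exactly `POSE_SIZE` bytes per pose before calling `unpack_pose`.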

VoltRun

Advisor: Prof. Behçet Murat Eyüboğlu

We have developed a radar that can detect life in a closed 2×2 room. The room is surrounded by a curtain, and the system works without contact. We send RF waves towards the room using an ADALM-PLUTO, process the reflected wave, and convert it into meaningful data. The project, which can be used in military and medical applications, detects a living being in an enclosed area and also observes its breathing type (fast, slow, or apnea) and bpm. The entire system is monitored via a simple, practical GUI. We have also developed a simulator that imitates human breathing for use in tests. It supports 3 different modes using a microprocessor and an actuator. In addition, the reflecting object consists of an agar-salt mixture with human-like permittivity.

Watt’s Up

Advisor: Assoc. Prof. Fatih Kamışlı

TrackSphere is a head-mounted, inside-out VR tracking system capable of real-time 6-DoF (degrees of freedom) motion estimation, tracked using an IMU, an array of ultrasonic sensors, and a camera. The sensor data is fused on a Raspberry Pi 4 and is displayed in real time through a three.js 3D render on an external laptop. The system aims to offer low-cost, wearable, and modular options in place of currently used external VR tracking systems.

Eyes: IMU + Ultrasonic Sensors + Camera (<5% error)

Body: A power bank and a gimbal structure are attached to the frame, which is placed on the helmet.

User Interface: Web Server

Cost: 186 USD

In this introduction video, we present our innovative system, showcasing the server interface to illustrate its functionality, along with real-time outputs and results for various movements. The system is placed on a helmet, which is characterized by a gimbal mechanism to ensure stable and accurate sensor data. Watch the video to see the system in action and explore its performance!

Wired & Inspired

Advisor: Assoc. Prof. Fatih Kamışlı

Packaged Chicken Classification System (PCCS) is an automatic system based on computer vision and mechanical control for classifying packaged chicken parts. A laser–LDR sensor pair detects incoming packages and triggers image capture by a camera operated by a Raspberry Pi. The captured images are then classified by a pre-trained EfficientNet-B4 model running on a PC, which displays the results in a GUI and logs data for later processing. After classification, the system activates servo-controlled sorting arms and a trapdoor system to push the packages into their respective bins. The combination of hardware and software components enables end-to-end real-time processing with an overall classification accuracy above 90%. PCCS provides a cost-effective and scalable way of removing human error, improving efficiency, and reducing labor costs in food packaging.

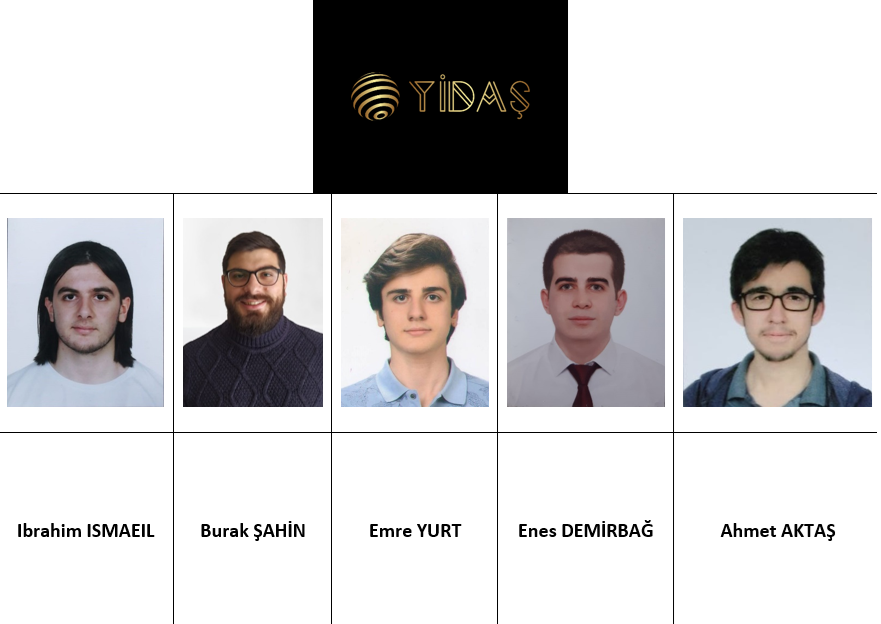

YİDAŞ

Advisor: Prof. Ali Özgür Yılmaz

The YİDAŞ project presents a fully automated system for detecting defects in both bare and assembled Printed Circuit Boards (PCBs), a critical need in modern electronics manufacturing. The system integrates a custom-built dual-speed conveyor, industrial-grade imaging hardware, and advanced computer vision algorithms to achieve real-time, high-precision fault detection. Type-A defects on bare PCBs are identified using a YOLOv8-based deep learning model, while Type-B defects such as missing or misaligned components are detected through region-based analysis. Additional methods, such as polarity verification, address complex issues like tombstoning and reverse mounting. The system features synchronized control via Arduino and PC-side software, with an ergonomic user interface and safety protocols. Performance tests confirm that the system meets industrial standards for detection speed, precision, and reliability. Through its modular architecture and cost-effective implementation, YİDAŞ offers a scalable solution for improving quality assurance in PCB production environments.